Sound clips (WAV, MP3, OGG, FLAC) from the movie Iron Man 2.

Jarvis: 'It appears that the continued use of the Iron Man suit is accelerating your condition.'

Jarvis: 'Sir, the reactor has accepted the modified core. I will begin running diagnostics.'

The WAV file is one of the simplest digital audio file formats and was developed back in 1991 by Microsoft and IBM for use within Windows 3.1. The WAV file has become a standard PC audio file format for everything from system and game sounds to CD-quality audio. It is also known as waveform audio and most commonly stores uncompressed pulse code modulation (PCM) data.

Jarvisuploaded.wav Paul Bettany: 'I have indeed been uploaded, Sir. We're on-line and ready.'

Jarviswish.wav Paul Bettany: 'As you wish.'

Limo.wav Gwyneth Paltrow: 'There's a car waiting for you outside that will take you anywhere you'd like to go.'

Mindright.wav Terrence Howard as Colonel James Rhodes: 'What you need is time to get your.

Jarvis is a simple and easy-to-use voice recognition software whose main purpose is to open applications and execute specific commands by listening to your voice. Simply connect a microphone to.


JarvisAI is an AI Python library

Project description

Last Updated: 21 February, 2021

- What is Jarvis AI?

- Prerequisite

- Getting Started- How to use it?

- How to contribute?

- Future?

1. What is Jarvis AI-

Jarvis AI is a Python module that can perform tasks such as acting as a chatbot or an assistant. It provides base functionality for any assistant application. JarvisAI is built using TensorFlow, PyTorch, Transformers and other open-source libraries and frameworks. You can contribute to this project to make it more powerful.

This project is created only for those who are interested in building a virtual assistant. Writing the code for a virtual assistant from scratch generally takes a lot of time, so I have built a library called 'JarvisAI', which gives you easy-to-use functionality for building your own virtual assistant.

Check more details here- https://github.com/Dipeshpal/Jarvis_AI

2. Prerequisite-

- To use it, only Python (> 3.6) is required.

- To contribute to the project: Python is the only prerequisite for basic scripting; Machine Learning and Deep Learning knowledge will help with the AI/ML features. Read the 'How to contribute' section of this page.

3. Getting Started (How to use it)-

Install the latest version-

pip install JarvisAI

It will install all the required packages automatically.

If anything fails to install, you can install the requirements manually:

pip install -r requirements.txt

The requirements.txt can be found here.

Usage and Features-

After installing the library you can import the module-

Check this script for more examples- https://github.com/Dipeshpal/Jarvis-Assisant/blob/master/scripts/main.py

Available Methods-

The method names make their functionality clear. You can check the code for examples. These are the names of the available functions you can use after creating JarvisAI's object-

Note: First of all, set up the initial settings of the project by calling the setup function.

- res = obj.mic_input(lang='en')

- res = obj.mic_input_ai(record_seconds=5, debug=False)

- res = obj.website_opener('facebook')

- res = obj.send_mail(sender_email=None, sender_password=None, receiver_email=None, msg='Hello')

- res = obj.launch_app('edge')

- res = obj.weather(city='Mumbai')

- res = obj.news()

- res = obj.tell_me(topic='tell me about Taj Mahal')

- res = obj.tell_me_time()

- res = obj.tell_me_date()

- res = obj.shutdown()

- res = obj.text2speech(text='Hello, how are you?')

- res = obj.datasetcreate(dataset_path='datasets', class_name='Demo', haarcascade_path='haarcascade/haarcascade_frontalface_default.xml', eyecascade_path='haarcascade/haarcascade_eye.xml', eye_detect=False, save_face_only=True, no_of_samples=100, width=128, height=128, color_mode=False)

- res = obj.face_recognition_train(data_dir='datasets', batch_size=32, img_height=128, img_width=128, epochs=10, model_path='model', pretrained=None, base_model_trainable=False)

- res = obj.predict_faces(class_name=None, img_height=128, img_width=128, haarcascade_path='haarcascade/haarcascade_frontalface_default.xml', eyecascade_path='haarcascade/haarcascade_eye.xml', model_path='model', color_mode=False)

- res = obj.setup()

- res = obj.show_me_my_images()

- res = show_google_photos()

- res = tell_me_joke(language='en', category='neutral')

- res = hot_word_detect(lang='en')
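The methods listed above can be wired into a simple command router. The sketch below is only an illustration: `StubAssistant` is a hypothetical stand-in that mimics three of the method names from the list (`tell_me_time`, `tell_me_date`, `website_opener`) so that the example runs without installing JarvisAI or connecting a microphone. The return values of the real library may differ, and `handle_command` is a hypothetical helper, not part of JarvisAI.

```python
import datetime


class StubAssistant:
    """Hypothetical stand-in exposing a few method names from the list above."""

    def tell_me_time(self):
        return datetime.datetime.now().strftime("%H:%M:%S")

    def tell_me_date(self):
        return datetime.date.today().isoformat()

    def website_opener(self, domain):
        # The real method presumably opens a browser; here we only build a URL.
        return f"https://www.{domain}.com"


def handle_command(obj, command):
    """Route a recognized phrase to one assistant method (illustrative only)."""
    text = command.lower().strip()
    if "time" in text:
        return obj.tell_me_time()
    if "date" in text:
        return obj.tell_me_date()
    if text.startswith("open "):
        return obj.website_opener(text[len("open "):])
    return None  # unknown command


if __name__ == "__main__":
    obj = StubAssistant()
    for phrase in ["what is the time", "tell me the date", "open facebook", "sing"]:
        print(phrase, "->", handle_command(obj, phrase))
```

With the real library, `obj` would be the JarvisAI object and `command` would typically come from `obj.mic_input(lang='en')`.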

4. How to contribute?

Clone this repo.

Create a virtual environment in Python.

Install the requirements from requirements.txt:

pip install -r requirements.txt

Now run __init__.py and understand how it works:

python __init__.py

Guidelines to add your own scripts / modules-

Let's understand the project's structure first-

4.1. All of the above are folders. Let's go through them-

JarvisAI: Root folder containing all the files

features: All the features supported by JarvisAI. This 'features' folder contains the different modules, and you can create your own modules here. Examples of modules are 'weather' and 'setup'; these are two folders inside the 'features' directory.

__init__.py: Run this file to test your work.

4.2. You can create your own modules in this 'features' directory.

4.3. Let's create a module and you can learn by example-

4.3.1. We will create a module that tells us the date and time.

4.3.2. Create a folder (module) named 'date_time' in the 'features' directory.

4.3.3. Create a Python script named 'date_time.py' in the 'date_time' folder.

4.3.4. Write a script of this kind (you can modify it to suit your own module). Read the comments in the script to understand the format-
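A minimal sketch of what such a script might look like follows. The function names `date` and `time` and the string formats are assumptions chosen for illustration, not the project's actual code.

```python
"""Illustrative sketch of a date_time feature module (names are assumptions)."""
import datetime


def date():
    """Return today's date as a string, e.g. '2021-02-21'."""
    return datetime.date.today().strftime("%Y-%m-%d")


def time():
    """Return the current local time as a string, e.g. '14:05:09'."""
    return datetime.datetime.now().strftime("%H:%M:%S")


if __name__ == "__main__":
    # Quick manual check when the file is run directly.
    print(date())
    print(time())
```

Each function has a docstring and returns a value instead of printing it, so the caller can decide whether to speak, log, or display the result.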

This script goes in the 'features/date_time/date_time.py' file. Make sure to add docs / comments. Also return a value if necessary.

4.3.5. Integrate your module into Jarvis AI-

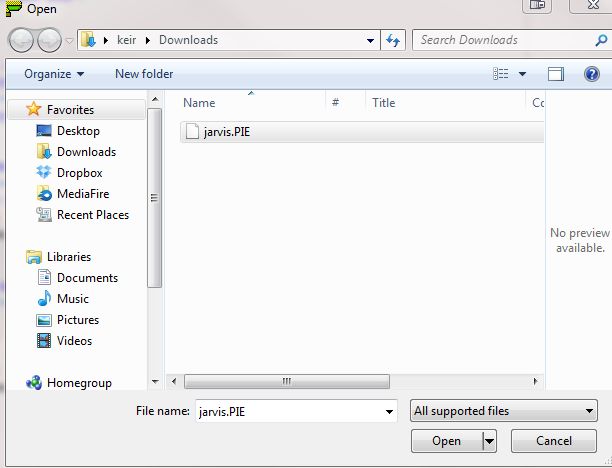

Open JarvisAI/JarvisAI/__init__.py. Format of this py file-

4.4. That's it. If you followed the guidelines, just run __init__.py; it should work fine.

4.5. Push the repo and we will test it. If it is found to be working and good, it will be added to the next PyPI version.

Next time you can import your created function from JarvisAI. Example: import JarvisAI.tell_me_date

5. Future?

Lots of possibilities: a GUI, integration with GPT-3, support for Android, IoT, home automation, APIs, distribution as a pip package, etc.

FAQs for Contributors-

What can I install? Ans: You can install any library you want in your module; make sure it is open source and compatible with Windows/Linux/Mac.

Code format? Ans: Read the example above, and make sure your code is compatible with Windows/Linux/Mac.

What should I not change? Ans: Existing code.

Credits? Ans: You will definitely get credit for your contribution.

Note: Once you have created your module, test it in different environments (Windows / Linux). Make sure the code quality is good, because your features will be added to JarvisAI and published as a PyPI project.

Help / Contact? Ans: Contact me on any of my social media or by email.

Let's make it big.

Feel free to use my code; don't forget to give credit. All the contributors will get credit in this repo.

Release history

0.2.8

0.2.7

0.2.6

0.2.5

0.2.4

0.2.3

0.2.2

0.2.1

0.2.0

0.1.9

0.1.8

0.1.7

0.1.6

0.1.5

0.1.4

0.1.3

0.1.2

0.1.1

0.1.0

0.0.9

0.0.7

0.0.6

0.0.5

0.0.4

0.0.3

0.0.2

0.0.1

Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

| Filename, size | File type | Python version |
|---|---|---|
| JarvisAI-0.2.8-py3-none-any.whl (29.5 kB) | Wheel | py3 |
| JarvisAI-0.2.8.tar.gz (16.5 kB) | Source | None |

Hashes for JarvisAI-0.2.8-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | cd264a364b494da123c58ef402ee47ad78609c9d8cfc43b20c26668faa832870 |
| MD5 | 89670c5af65057e9bbc4b8796841f251 |
| BLAKE2-256 | 1d22b10bbc7f5b698a38084b1c5a9052800aad194dffd41e07b54e55363f8996 |

Hashes for JarvisAI-0.2.8.tar.gz

| Algorithm | Hash digest |
|---|---|
| SHA256 | 341516b8305faff076ccaee5bbccb32415e55e402d62653ef9bfac8b1e456a86 |
| MD5 | c4fad42b496535279f8441c8a238438d |
| BLAKE2-256 | d0ea6581b218355314ca5cee2b9065609615ae618a9a5f803684465ed1370bc0 |

This Jarvis Speech Skills Quick Start Guide is a starting point to try out Jarvis; specifically, this guide enables you to quickly deploy pretrained models on a local workstation and run a sample client.

For more information and questions, visit the NVIDIA Jarvis Developer Forum.

Prerequisites

Before you begin using Jarvis AI Services, it's assumed that you meet the following prerequisites.

You have access and are logged into NVIDIA GPU Cloud (NGC). For step-by-step instructions, see the NGC Getting Started Guide.

You have access to a Volta, Turing, or an NVIDIA Ampere architecture-based A100 GPU. For more information, see the Support Matrix.

You have Docker installed with support for NVIDIA GPUs. For more information, see the Support Matrix.

Models Available for Deployment

To deploy Jarvis AI Services, there are two options:

Option 1: You can use the Quick Start scripts to set up a local workstation and deploy the Jarvis services using Docker. Continue with this section to use the Quick Start scripts.

Option 2: You can use a Helm chart. Included in the NGC Helm Repository is a chart designed to automate the steps for push-button deployment to a Kubernetes cluster. For details, see Kubernetes.

When using either of the push-button deployment options, Jarvis uses pre-trained models from NGC. You can also fine-tune custom models with NVIDIA NeMo. Creating a model repository using a fine-tuned model trained in NeMo is a more advanced approach to generating a model repository.

Local Deployment using Quick Start Scripts

Jarvis includes Quick Start scripts to help you get started with Jarvis AI Services. These scripts are meant for deploying the services locally for testing and running the example applications.

Go to Jarvis Quick Start and select the File Browser tab to download the scripts, or download them via the command line with the NGC CLI tool by running:

Initialize and start Jarvis. The initialization step downloads and prepares Docker images and models. The start script launches the server.

Within the quickstart directory, modify the config.sh file with your preferred configuration. Options include which models to retrieve from NGC, where to store them, and which GPU to use if more than one is installed in your system (see Local (Docker) for more details).

Note: This process can take quite a while depending on the speed of your Internet connection and the number of models deployed. Each model is individually optimized for the target GPU after download.

Start a container with sample clients for each service.

From inside the client container, try the different services using the provided Jupyter notebooks.

For further details on how to customize a local deployment, see Local Deployment (Docker).

Running the Jarvis Client and Transcribing Audio Files

For ASR, run the following commands from inside the Jarvis Client container to perform streaming and offline transcription of audio files.

For offline recognition, run:

jarvis_asr_client --audio_file=/work/wav/sample.wav

For streaming recognition, run:

jarvis_streaming_asr_client --audio_file=/work/wav/sample.wav
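Before passing your own recording to the clients, it can help to check what the WAV file actually contains. The sketch below uses only Python's standard `wave` module: it writes a short test tone and then reads back the header fields. This guide does not state which audio format the Jarvis clients expect, so the mono, 16-bit, 16 kHz values are example values only, and `write_tone` and `describe_wav` are hypothetical helper names.

```python
import math
import os
import struct
import tempfile
import wave


def write_tone(path, seconds=0.1, rate=16000, freq=440.0):
    """Write a mono 16-bit PCM sine tone so there is a file to inspect."""
    n = int(seconds * rate)
    frames = b"".join(
        struct.pack("<h", int(0.5 * 32767 * math.sin(2 * math.pi * freq * i / rate)))
        for i in range(n)
    )
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        w.setframerate(rate)
        w.writeframes(frames)


def describe_wav(path):
    """Return basic PCM parameters read from the WAV header."""
    with wave.open(path, "rb") as w:
        return {
            "channels": w.getnchannels(),
            "sample_width_bytes": w.getsampwidth(),
            "sample_rate_hz": w.getframerate(),
            "duration_s": w.getnframes() / w.getframerate(),
        }


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "sample.wav")
    write_tone(path)
    print(describe_wav(path))
```

Calling `describe_wav` on a file such as /work/wav/sample.wav reports the same fields for a real recording.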

Running the Jarvis Client and Converting Text to Audio Files

From within the Jarvis client container, synthesize the audio files by running:


The audio files are stored in the /work/wav directory.


The streaming API can be tested by using the command-line option --online=true. However, with the command-line client there is no difference between the two options, since it saves the entire audio to a .wav file.